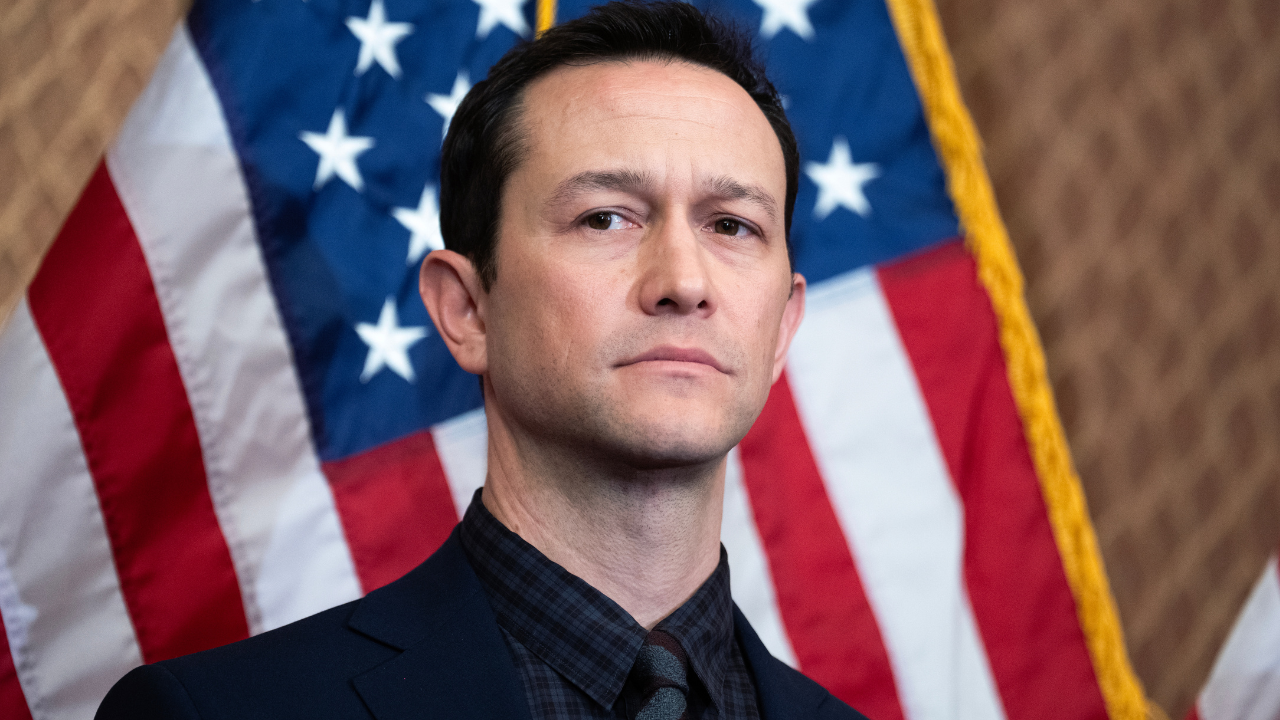

Actor and filmmaker Joseph Gordon-Levitt joined parents on Capitol Hill to advocate for the repeal of Section 230, a law that shields tech companies from liability for user-generated content. Gordon-Levitt argued that this legal protection allows “amoral companies” to prioritize profits over public safety, citing the tragic stories of children lost to online abuse, including sextortion and cyberbullying. He expressed his fervent hope for bipartisan support to dismantle this decades-old shield, emphasizing the urgent need for accountability and change to prevent further harm to children.


It’s concerning to hear about the alarming rise of sextortion and threats targeting children online, and the calls for significant internet reform stemming from these issues are certainly warranted. The focus on Big Tech’s role in these problems marks a critical juncture: the platforms that host so much of our digital lives are being asked to answer for the harms facilitated within them. This isn’t just about isolated incidents; it’s about systemic vulnerabilities that allow predators to exploit children and create environments of fear.

The debate around Section 230 of the Communications Decency Act is central to these discussions. Repealing or significantly altering Section 230, as some are proposing, aims to shift responsibility for user-generated content more directly onto the platforms themselves. The idea is that by making platforms more liable for the content they host, they will be incentivized to implement more robust moderation and safety measures. However, there are significant concerns about the potential unintended consequences of such a drastic change, including the possibility of widespread censorship and the chilling effect it could have on free speech and the operation of many online services as we know them.

Many believe that the current model of the internet, often referred to as Web 2.0, is built on the premise that users are responsible for their own content, and platforms are expected to moderate to the best of their abilities. Without the protections afforded by Section 230, the fear is that platforms would face an onslaught of lawsuits, potentially for any content posted by their users, even if they have taken reasonable steps to moderate. This could lead to an environment where platforms either disengage from moderating altogether, resulting in an unmoderated web, or engage in extreme over-moderation to avoid liability, which could stifle legitimate discourse and diverse viewpoints.

There’s a strong argument that the focus should be on empowering parents as the primary gatekeepers of their children’s online experiences. Since children lack the financial independence to acquire devices or internet access on their own, parents are the ones who ultimately enable their online presence. Proponents of this view see robust parental control tools, with full parental oversight and moderation of children’s accounts and devices, as a more direct and effective way to protect minors. This approach places the responsibility squarely on parents to decide what their children can access and engage with online.

The notion of internet reform driven by politicians also draws skepticism from many. The concern is that legislative efforts, while potentially well-intentioned, may not fully grasp the complexities of the digital landscape or could be influenced by agendas that go beyond child safety. The fear is that “internet reform” could become a euphemism for increased government control over online discourse, leading to a more restrictive internet that does not match the public’s understanding of a free and open digital space.

Moreover, forcing platforms to censor controversial topics to avoid lawsuits is seen as a dangerous path. It could disproportionately affect journalists and the dissemination of important, albeit sometimes uncomfortable, information. A significant concern is that platforms would err on the side of caution and silence a wide range of voices to mitigate legal risk, producing a more sanitized and less informative online environment.

The discussion also touches on the evolving nature of what we call “tech companies.” Some argue that many of these entities have become more akin to media companies, shaping narratives and influencing public opinion in ways similar to traditional media outlets, rather than purely technology providers. This distinction is important when considering regulation and accountability, as it suggests a different set of responsibilities and potential impacts.

Ultimately, the debate surrounding these issues is multifaceted, with valid concerns on all sides. While the desire to protect children from online harms is universal and deeply felt, the proposed solutions and the path to achieving them are subject to intense scrutiny and disagreement, highlighting the profound challenges of regulating a rapidly evolving digital world. The focus needs to be on finding solutions that effectively safeguard children without unduly compromising the open and accessible nature of the internet.