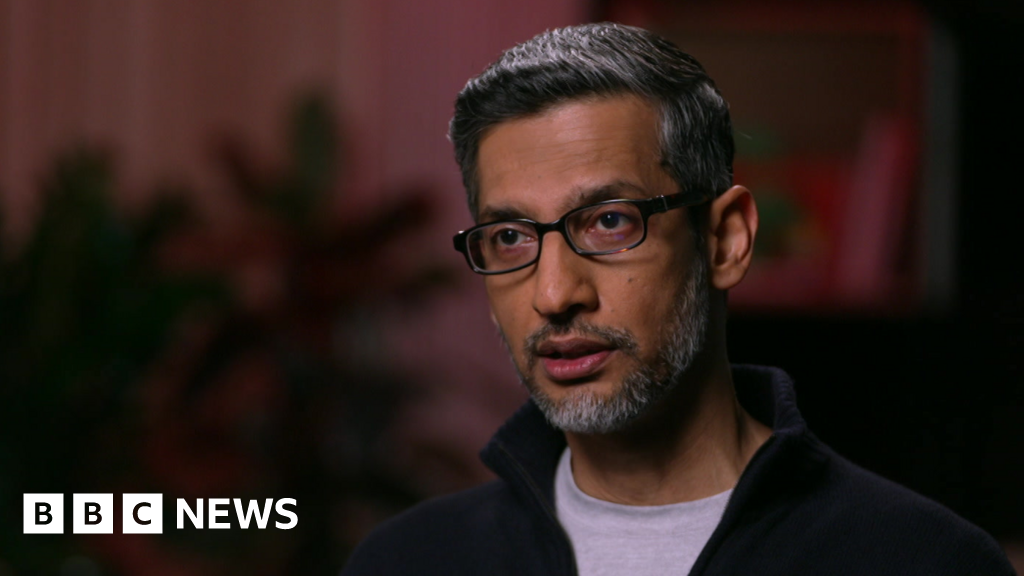

Alphabet CEO Sundar Pichai has expressed concern about the potential for an AI bubble, warning that no company, including Google, would be immune if it burst. He acknowledged elements of “irrationality” in the current AI boom and drew parallels to the dotcom era, while also stressing the technology’s profound future impact. Despite this caution, Pichai emphasized Google’s strong position thanks to its integrated technology stack, and pointed to the company’s commitment to significant investments in UK AI research and infrastructure. He also noted the immense energy demands of AI, which will require energy infrastructure challenges to be addressed and could affect climate targets.


Sundar Pichai, the Google boss, warns “no company immune” if the AI bubble bursts, and frankly, that statement has given a lot of people pause. The reaction feels like a mix of impending doom and reluctant acceptance of reality. The general vibe is that the AI hype train has been chugging along, fueled by ambition, fear of missing out, and perhaps a touch of blind faith. Now, as the pressure builds, the warning bells are starting to ring.

There is a collective unease about the potential fallout. The fear isn’t just about a market downturn; it’s about widespread damage to the economy and, consequently, to the livelihoods of many. Expectations of a crash appear to be running high. There is also strong skepticism towards the CEOs who have spearheaded this AI frenzy: people are questioning their judgment, their motives, and their grasp of the true value and sustainability of these AI ventures.

The notion that these companies might need a bailout if the bubble bursts is particularly irritating to many. The idea of the government stepping in to rescue companies that may have made risky or short-sighted bets does not sit well. The argument is that a bailout would set a dangerous precedent, rewarding failure and discouraging responsible innovation. The prevailing view is that those who made the bad bets should face the consequences of their actions.

Many people believe the true value of AI is being vastly overstated, and that the promise of groundbreaking innovation is clashing with the reality of limited practical applications and high costs. In the view of many, the current reliance on AI is not sustainable. The resources consumed (energy, data, infrastructure) are enormous, while the tangible returns are still often questionable. There is also a feeling that the benefits of AI will not be evenly distributed, and that job displacement and other negative consequences could follow.

The irony of the situation seems clear: the very companies pushing AI most aggressively also seem to be admitting that they are unsure it will succeed. They appear trapped in a cycle of speculation, chasing the next big thing and making decisions out of fear rather than from a clear vision. The underlying sentiment is frustration, along with a belief that AI is being used to inflate stock prices and extract profit rather than to improve the lives of the general population.

Concerns about ethics and the potential for misuse are also present. Fears of AI being used for plagiarism, manipulation, or surveillance are significant, and a strong undercurrent of ethical debate surrounds the rapid development and deployment of AI technologies. The worry is that a lack of accountability and regulation could lead to widespread harm.

It is also worth noting that the consequences of a burst bubble may be selective. Some believe that larger companies, like Google, could survive a crash and consolidate their position by acquiring smaller, innovative companies. In this view, a crash would not eliminate AI entirely; it would simply reshape the market and concentrate power among a few dominant players. There is a sense of inevitability here.

The timing of this warning is also being scrutinized. Some see it as a strategic move to lay the groundwork for a potential bailout, while others view it as a genuine acknowledgment of the risks. It’s hard not to wonder whether this is more about Google positioning itself to weather the storm or about genuine concern for the health of the tech industry. Opinions on this are divided.

Ultimately, Sundar Pichai’s warning highlights the precarious state of the AI industry. It underscores the high stakes, the potential for significant disruption, and the need for a more realistic and responsible approach to innovation. Whether it is a prelude to a significant market correction or a call for responsible growth is still up for debate. One thing is certain, though: the warning has sparked a lively discussion about the future of AI. The consensus appears to be that a reality check is needed, delivered with a heavy dose of skepticism and a clear message: don’t expect a free pass if this all comes crashing down.