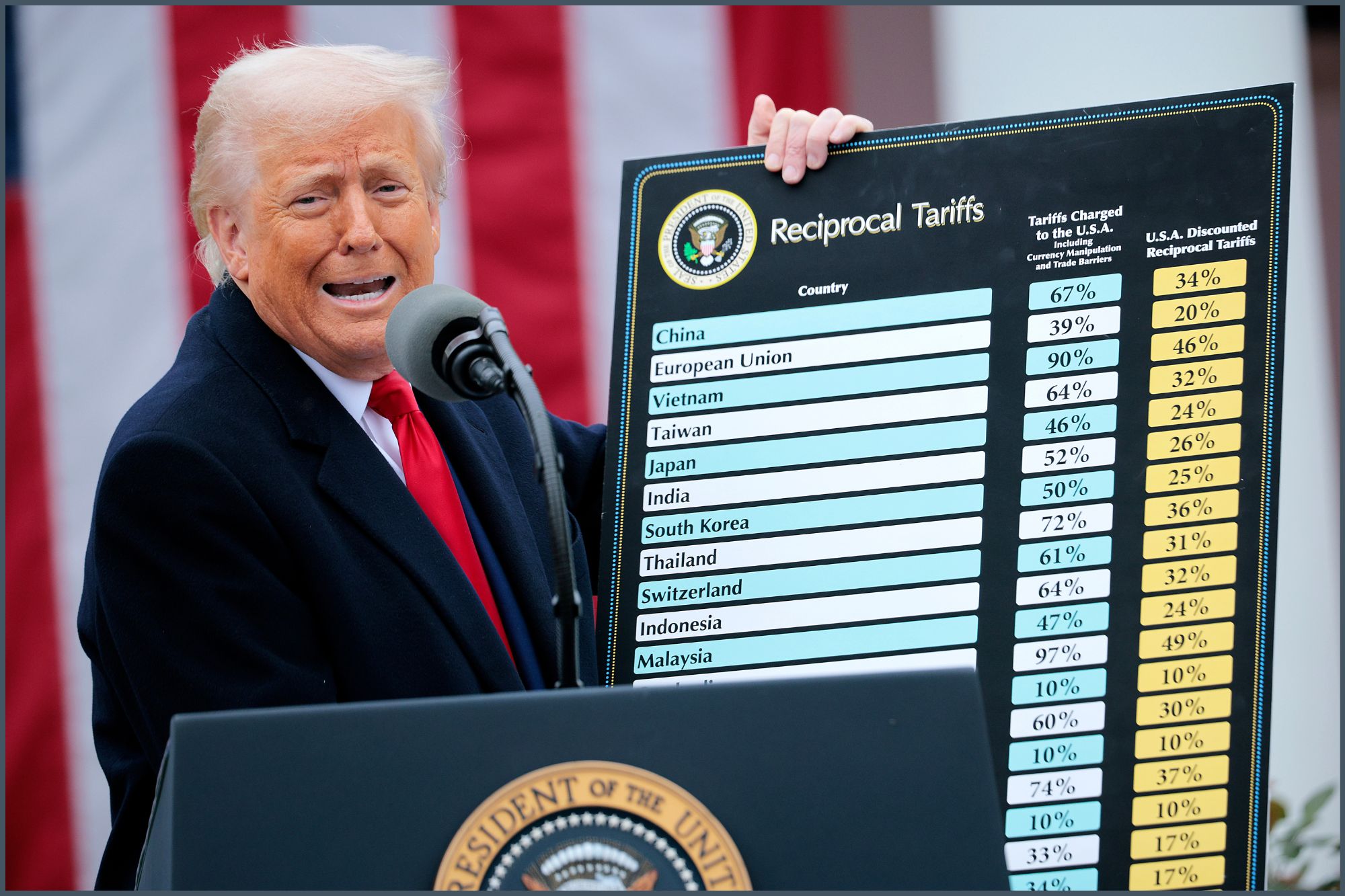

The Trump administration’s newly announced tariff plan is under scrutiny, with online commentators and experts alleging that ChatGPT was used to determine the tariff percentages. The proposed tariffs, criticized as nonsensical, appear to be calculated using a simple formula: the greater of 10% or the country’s trade deficit divided by U.S. imports from that country. That formula mirrors a response from ChatGPT to a similar prompt. Several influencers have called the methodology flawed and linked it to significant market declines, including a drop of more than 4% in the S&P 500 and more than 5% in the Nasdaq. The accusations raise serious concerns about the use of AI in formulating critical economic policy.
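To make the alleged formula concrete, here is a minimal sketch in Python of the rule as described above: take the greater of a 10% floor or the trade deficit divided by imports. The function name `tariff_rate`, its parameters, and the sample figures are hypothetical and chosen for illustration only; they are not actual trade data, and this is not a confirmed account of how the published rates were produced.

```python
def tariff_rate(trade_deficit: float, imports: float, floor: float = 0.10) -> float:
    """Return the greater of the floor rate or trade_deficit / imports.

    trade_deficit: U.S. goods trade deficit with the country (negative for a surplus).
    imports: U.S. imports from that country, in the same units.
    floor: minimum rate, 10% as described in the article.
    """
    if imports <= 0:
        # Assumption: with no recorded imports the ratio is undefined,
        # so this sketch falls back to the floor rate.
        return floor
    return max(floor, trade_deficit / imports)


# Hypothetical figures, not real trade data.
print(tariff_rate(50.0, 100.0))   # deficit equal to half of imports
print(tariff_rate(-20.0, 100.0))  # a surplus still yields the floor
print(tariff_rate(0.0, 0.0))      # no trade at all still yields the floor
```

Under this rule, a country with a trade surplus, or a territory with almost no trade, still receives the 10% floor, which is consistent with the complaint that the plan ends up covering places with negligible economic activity.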


The accusation that the administration used ChatGPT to devise its tariff plan is swirling, fueled by the unsettling ease with which the AI generates similar formulas. This isn’t simply about flawed economic policy; it raises disturbing questions about the potential for AI to inadvertently shape, or even control, national policy.

The absurdity of the situation is striking. The tariff plan, allegedly generated with the help of AI, inexplicably targets islands with negligible populations, highlighting a profound disconnect between policy and reality. This raises immediate concerns about competence and due diligence. Were proper checks and balances bypassed? Was there any genuine attempt to assess the impact on the affected economies? If not, the incompetence is breathtaking.

It’s not just about the economic implications, however. The very idea that an AI could so easily generate a policy blueprint suggests a dangerous level of reliance on technology, bypassing human expertise and critical thinking. This raises fundamental questions about accountability and transparency in governance. Who is ultimately responsible for the consequences of AI-generated policies? How can we ensure that such powerful tools aren’t misused or exploited to pursue ill-conceived objectives?

This incident is not isolated. A pattern of questionable decision-making, characterized by a disregard for expertise and a lack of coherent strategy, seems to be emerging. The alleged reliance on AI is merely a symptom of a broader problem—a lack of genuine engagement with the complexities of policy-making. It underscores the urgent need for robust checks and balances within the political system.

The incident further exposes a dangerous trend of relying on AI for quick fixes and simple solutions to complex problems. AI is a tool, but a tool wielded without understanding can lead to disastrous outcomes. This situation exemplifies that danger. Simply inputting data without understanding the context or potential consequences can yield absurd and harmful results. This isn’t a criticism of AI itself; it’s a criticism of the irresponsible way it’s being employed.

There’s a deep irony here. While concerns about AI’s potential to displace human workers are legitimate, the current situation highlights an even more alarming prospect: AI potentially directing and influencing policy decisions without adequate human oversight or accountability. This raises existential questions about the future of governance and the role of human intelligence in the decision-making process. Are we handing over the reins of power without fully grasping the implications?

The whole episode feels surreal, like something ripped from a satirical dystopian novel. The idea of a leader potentially using a chatbot to generate significant policy proposals—a process lacking scrutiny and based on potentially flawed input—is both alarming and deeply unsettling. It highlights the potential for unintended consequences when powerful tools are deployed without proper understanding or oversight.

The reaction to this situation is equally telling. While some focus on the economic repercussions, others rightly see it as a symptom of a much deeper problem: a systemic erosion of accountability and a dangerous concentration of power. The lack of opposition, even in the face of such clearly questionable actions, speaks volumes about the state of political discourse and the willingness to challenge those in power.

The potential long-term implications are staggering. This incident may be a harbinger of a future where technology shapes policy more than human judgment does. It reinforces the need for a serious public conversation about the ethics of AI in governance and about safeguards against the unchecked power of technology in the political sphere. The question remains: are we sufficiently equipped to navigate this brave new world of AI-influenced policy-making, or are we sleepwalking into a future we barely understand? The answers, unfortunately, seem disturbingly unclear.