German authorities uncovered over 100 dormant websites designed to disseminate disinformation, likely in preparation for the upcoming Bundestag election. These sites, mimicking legitimate news sources, contain AI-generated content and are poised to rapidly spread fabricated stories via social media. This activity mirrors previous instances of foreign interference in European elections, particularly those attributed to Russia. The timing is especially concerning given the AfD’s strong showing in polls.


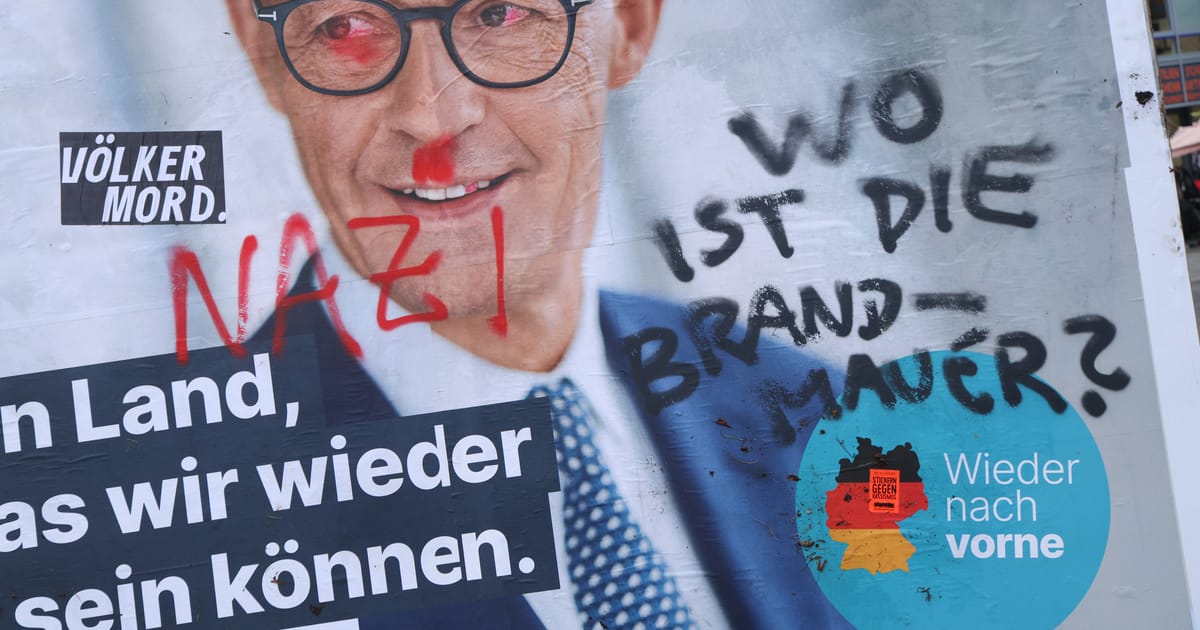

Putin’s disinformation networks are actively flooding German social media platforms in an attempt to sway the upcoming election. This isn’t a new tactic; it’s been successfully employed elsewhere, and the current surge of pro-AfD messaging on platforms like YouTube and Instagram demonstrates its continued effectiveness. The sheer volume of comments pushing specific political agendas, often unrelated to the original post’s content, is alarming. These comments typically come from accounts with generic names, AI-generated profile pictures, or no profile pictures at all, further indicating a coordinated campaign.

The pattern of disinformation has intensified, particularly since the recent coalition breakdown and the announcement of new elections. This suggests a deliberate escalation of the effort to influence the outcome. While the use of bots and AI in spreading misinformation isn’t yet illegal, the scale and organization of this campaign demand a response. Blocking Russia’s internet access outright seems like a drastic, yet possibly necessary, step given the persistent nature of the problem.

The question of how to effectively counter these disinformation campaigns is complex. While information campaigns are often suggested, the sheer volume of disinformation makes keeping pace with it a significant challenge. The idea of deploying counter-bots to fact-check and debunk the misinformation in real time holds promise, but its feasibility and potential unintended consequences need careful consideration. A more passive approach, focusing on digital literacy and critical thinking skills within the population, is also vital, but this is a long-term solution and may not be enough to counter the immediate threat.

Many propose stronger measures. Severing internet connections with Russia, in a manner akin to China’s Great Firewall, is a radical suggestion. However, the implications for freedom of speech and access to information need careful consideration. The alternative is to allow this foreign interference in the democratic process, which many view as an act of war. Some argue that this framing justifies extreme measures, such as seizing Russian assets and imposing further sanctions. The success of previous disinformation campaigns, like those targeting the American election, raises legitimate concerns about Germany’s vulnerability. The fact that Russia restricts its own citizens’ access to social media while simultaneously flooding other countries with propaganda highlights a blatant hypocrisy.

The challenge lies in finding a response that doesn’t mirror Russia’s authoritarian tactics while effectively countering its disinformation. Simply “fighting fire with fire” by creating our own bot networks risks perpetuating the problem and eroding trust in information. The complexities of free speech, combined with the sophisticated nature of disinformation campaigns, require innovative solutions. Proposals such as identifying and censoring AI-generated content on platforms like X (formerly Twitter) warrant exploration, alongside educating the public to become more discerning consumers of online information.

The issue isn’t solely technological; it’s also political and social. A lack of proactive measures and a reliance on reactive solutions have allowed the problem to fester. The absence of swift and decisive action from governments might indicate a failure to understand the seriousness of the threat or, perhaps, even a tacit acceptance of foreign interference in democratic processes. The potential for such interference to undermine electoral legitimacy raises significant questions about the future of democratic governance in a digitally connected world. The long-term consequences of inaction could be far-reaching and damaging. The speed and scale of the disinformation campaigns, combined with the relatively slow response from governing bodies, suggest that more aggressive measures may become necessary to safeguard elections and maintain the integrity of democratic processes. The longer this situation persists, the greater the risk that it becomes normalized, with a chilling effect on democratic participation.