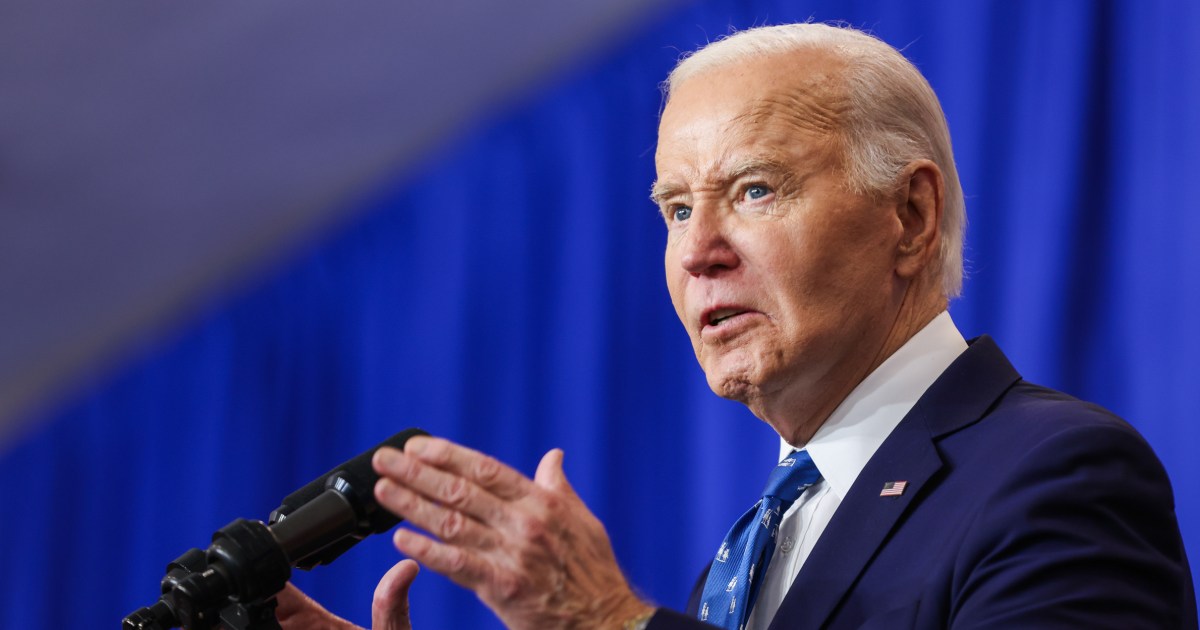

President Biden strongly condemned Meta’s decision to eliminate its fact-checking program, calling it “shameful” and harmful to the spread of truthful information. His criticism follows an announcement by Meta CEO Mark Zuckerberg, who cited pressure from the Biden administration as a contributing factor in the decision. Zuckerberg claims the administration’s requests to remove content were excessive, and Meta says a user-driven system will replace fact-checking. Biden’s criticism echoes concerns about the spread of misinformation raised by California Governor Gavin Newsom.

President Biden’s characterization of Meta’s decision to end fact-checking as “really shameful” highlights a growing concern about the spread of misinformation in the digital age. This move by Meta, particularly given the current climate of rampant lies and propaganda, raises serious questions about the platform’s responsibility in curating its content and upholding truthfulness. While previous fact-checking efforts may not have been perfect, their complete abandonment leaves a significant gap in combating the spread of falsehoods.

The elimination of fact-checking on Meta’s platforms has been met with widespread criticism, with many accusing the company of prioritizing profits over responsible content moderation. Concerns exist that this decision will further empower the spread of harmful misinformation and conspiracy theories, potentially influencing public opinion and undermining democratic processes. The potential consequences extend far beyond individual users, impacting everything from public health to political discourse.

Critics argue that Meta’s actions show a lack of corporate social responsibility and a willingness to put profit ahead of ethical considerations. The company’s history, including its role in past controversies, fuels this skepticism and further undermines public trust in its commitment to ethical conduct and responsible content moderation. Critics see those past failings as evidence of a systemic problem rather than isolated incidents.

The decision to end fact-checking is viewed by many as a deliberate move to cater to certain political interests and ideologies. This fuels concerns that the platform will become increasingly biased and susceptible to manipulation. Abandoning fact-checking opens the door to a flood of unchecked and potentially harmful content, thereby creating a dangerous echo chamber for specific viewpoints.

Critics also point out that Meta, along with other major tech companies, wields immense power over the flow of information, so its actions have significant ramifications for society as a whole. They question whether these platforms are truly acting as impartial arbiters of information or are actively shaping public discourse for their own benefit. Such influence carries a commensurate responsibility, one that many believe Meta has abdicated.

The impact extends beyond politics; the spread of misinformation poses a threat to public health, education, and social cohesion. The consequences of unchecked falsehoods can be severe, leading to harmful behaviors, mistrust in institutions, and ultimately, instability. It’s this broader societal impact that fuels much of the criticism directed at Meta’s decision.

A call to action is also emerging, urging users to reconsider their relationship with Meta’s platforms. Its proponents argue that expressing disapproval isn’t enough; users must demonstrate their discontent through their actions, whether by reducing their use of the platforms, deleting their accounts, or turning to other forms of communication.

Many suggest that leaving Meta’s platforms altogether is the most effective form of protest. Switching to alternative social media platforms or prioritizing other forms of communication sends the company a clear message about user expectations, and could also hurt Meta’s bottom line. Some users may find this difficult because of personal connections or because their businesses rely on the platform, but leaving is presented as a necessary step to force change.

The overall sentiment is one of deep disappointment and anger at Meta’s decision. This is not simply a disagreement about online content moderation; it reflects a wider concern about the erosion of trust in institutions and the dangers of unchecked misinformation in a society already grappling with polarization and division. At stake, critics say, is not only Meta’s conduct but the future of online discourse and the role tech companies play in shaping society.