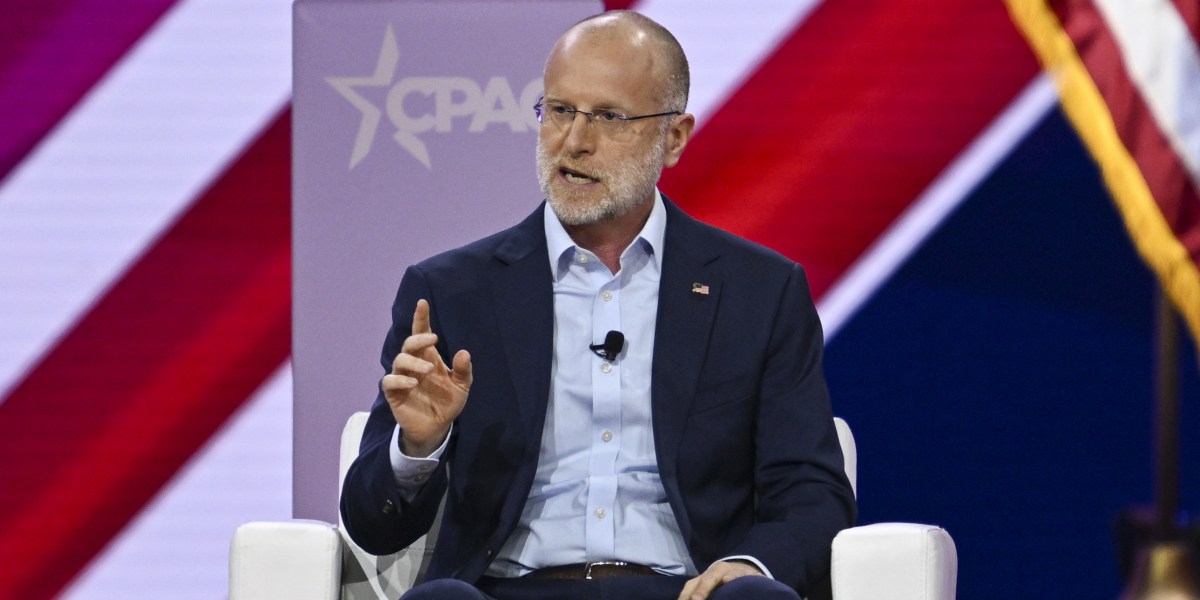

Brendan Carr, President-elect Trump’s FCC nominee and a long-time critic of Big Tech, advocates repealing Section 230 of the Communications Decency Act. This provision shields online platforms from liability for user-generated content, a point of contention for Carr, who believes it creates an unacceptable power imbalance. Carr’s views, detailed in the Heritage Foundation’s Project 2025, align with both Trump’s and Biden’s stated desires to reform or eliminate Section 230, despite their differing motivations. Repealing Section 230 enjoys bipartisan support, though legislative progress remains slow.


Trump’s pick for FCC chair is reportedly pushing to repeal Section 230 of the Communications Decency Act, the law that currently shields social media companies from liability for content posted by their users. This proposed change carries significant implications, potentially reshaping the online landscape in profound and unforeseen ways.

This move would effectively make social media platforms legally responsible for everything posted on their sites. Imagine the sheer volume of lawsuits that could follow: a seemingly endless stream of litigation stemming from every controversial statement, inaccurate claim, or hateful message. The financial burden alone could cripple these platforms.

The practical implications extend far beyond simple cost. Companies would be forced to implement incredibly stringent content moderation policies, possibly resulting in excessive censorship to avoid legal repercussions. This could lead to a chilling effect on free speech, stifling legitimate discussion and diverse viewpoints. The constant fear of legal action could force platforms to err on the side of caution, removing even innocuous content to minimize risk.

The potential for abuse is alarming. Liability of this kind could easily be weaponized against political opponents or those holding unpopular views. Imagine a flood of strategically filed lawsuits aimed at silencing dissenting voices, effectively functioning as censorship by proxy.

Furthermore, the very business model of many social media companies would become unsustainable. The cost of legal battles, combined with the restrictive moderation necessary to avoid liability, would likely make it financially unviable to operate. Many platforms could be forced to shut down, leading to a dramatic contraction in online communication and information sharing.

This change wouldn’t just affect the major social media giants; smaller platforms and even online forums would be drastically affected. Any site that allows user-generated content would suddenly bear enormous legal risk, potentially forcing many to cease operations to avoid crippling legal costs. This could decimate online communities and platforms for discussion.

Ironically, this proposal, ostensibly aimed at increasing accountability, could lead to a decrease in responsibility. Faced with the threat of overwhelming liability, social media companies might resort to blunt, indiscriminate content removal rather than engaging in thoughtful and nuanced moderation. The result could be a less informed and less engaged public sphere.

The impact on political discourse is particularly troubling. Already fractured and polarized, online political conversations could become even more toxic and restricted. The chilling effect on free expression could further marginalize vulnerable groups and stifle vital public debate.

Some predict this shift could also accelerate the trend toward centralized, state-controlled media. If privately owned platforms are unable to operate under the weight of legal liability, the door could be opened for government-backed alternatives to fill the void.

It’s also worth considering the uneven impact this could have. While the major platforms might have the resources to fight extensive litigation, smaller platforms and startups would likely be overwhelmed. This could exacerbate existing inequalities in online access and representation.

Ultimately, this proposal seems fraught with unintended consequences. While the intent may be to hold social media companies more accountable, the likely outcome appears to be a severely diminished and potentially distorted online landscape, one characterized by censorship, legal battles, and a reduction in online expression and debate. The implications of this potential change extend well beyond the immediate headlines and warrant serious and widespread consideration.